An "optimized" system may seem complicated on paper, but the purpose of testing many alternatives, including "overoptimized" strategies, is to discover the key success factors that drive performance. Backtesting is invaluable for exploring different possibilities before implementing a system live. While live trading demands simplicity, rigorous backtesting justifies some complexity.

The aim of testing various iterations is to discover what really drives performance: the one or two factors most correlated with profits. Then, in live trading, you can pare down to a simple system that capitalises on these key success factors. Backtesting many possibilities in this way helps home in on the simplest, most robust system for live trading. The optimized system on paper serves as a guide to refining a basic approach you can reliably follow over the long term.

Skate.

Mr Skate,

I found this post interesting. Why?

Because it goes some way to addressing the 'Optimisation Paradox' and the issue often conflated with it, 'Overfitting'.

So a definition of optimisation could be: parameter optimisation results in a system that is more likely to perform well in the future, but less likely to outperform the simulation.
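To make that definition a bit more concrete, here is a rough sketch of the idea: optimise a parameter on one stretch of data, then see how the same setting does on data it has never seen. Everything in it is an assumption for illustration only: the returns are synthetic random numbers, the "system" is a toy momentum rule, and names like `strategy_return` and `optimise` are made up for this post, not taken from any real backtesting package.

```python
import random

def make_returns(n, seed):
    """Synthetic daily returns (assumption: small positive drift plus noise)."""
    rng = random.Random(seed)
    return [rng.gauss(0.0003, 0.01) for _ in range(n)]

def strategy_return(returns, lookback):
    """Toy rule: hold the next day only if the last `lookback` returns sum to > 0."""
    total = 0.0
    for i in range(lookback, len(returns)):
        if sum(returns[i - lookback:i]) > 0:
            total += returns[i]
    return total

def optimise(returns, lookbacks):
    """Pick the lookback with the highest total return on the data supplied."""
    return max(lookbacks, key=lambda lb: strategy_return(returns, lb))

returns = make_returns(2000, seed=1)
in_sample, out_sample = returns[:1000], returns[1000:]
lookbacks = range(5, 105, 5)

best = optimise(in_sample, lookbacks)              # tuned on the first half only
is_result = strategy_return(in_sample, best)       # the "simulation" figure
oos_result = strategy_return(out_sample, best)     # the "future" figure

print(f"best lookback: {best}")
print(f"in-sample return: {is_result:.4f}")
print(f"out-of-sample return: {oos_result:.4f}")
```

The in-sample figure is by construction the best of all the lookbacks tried, so it is the flattering number. The out-of-sample figure is the one that says something about the future.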

Overfitting, however, is when the system becomes too (or overly) complex: the rules applied only affect a small number of trades that had outsized effects on the results.

The latter could apply to systems tested against short periods of historical data, which circles back to an earlier discussion around the length of historical market data that should be used in testing a system. Obviously the correct answer is the more data the better, assuming that you want the system to trade for a long time.
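One quick way to check for the "small number of trades with outsized effects" problem is to measure how much of the total profit comes from the best handful of trades. The sketch below is only an illustration: the function name `profit_concentration` and the trade figures are invented for the example, not taken from anyone's actual results.

```python
def profit_concentration(trade_pnls, top_n=3):
    """Fraction of total net profit contributed by the top_n most profitable trades."""
    total = sum(trade_pnls)
    if total <= 0:
        raise ValueError("total net profit must be positive")
    top = sorted(trade_pnls, reverse=True)[:top_n]
    return sum(top) / total

# Hypothetical per-trade profit and loss figures (made up for illustration).
pnls = [120, -40, 35, -25, 900, 15, -60, 50, -30, 20]

share = profit_concentration(pnls, top_n=1)
print(f"share of net profit from the single best trade: {share:.1%}")
```

If one or two trades account for most of the net profit, it is worth asking whether the rules that captured them would ever fire again outside the test period.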

Now you have used what has been described as a complex set of rules. Do you consider them (overly) complex?

The historical data is short. Too short?

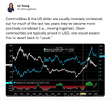

What we are talking about is: long historical data means long gaps between periods of high volatility and periods of average/low volatility.

Short data run: overfitted for high volatility (or whatever market condition you are looking for).

Which (I'm guessing here a bit) would mean that if you used long data runs, the system would be (more) vulnerable to periods of high volatility. Whereas if you used a short data run, say from 2020-2023, where we had that high vol. event and higher average vol. for some time, assuming a return to high(er) vol. the system would (should) perform better?

Curious.

jog on

duc