With regard to programming languages like Python, reading some of the posts on this forum I gather that people are having trouble accessing security prices stored in files and performing technical analysis functions on them, such as a simple moving average. It also seems that Python offers no obviously easy way to perform simple technical analysis on a whole portfolio of securities: the Python packages and examples out there look rather complex, which leads people to put this kind of stuff in the too hard basket. That's not good for anyone, and it's certainly not good for those who want to delve into data science.

I've decided to bridge the gap using Python's ctypes module and a shared library. I have written an efficient C/C++ shared library that reads CSV files (which must follow a strict format) into memory. A Python script (or a program in another language) can then iterate through the securities, fetching all of the corresponding prices (e.g. close) for each one as an array. This lets users concentrate on the technical analysis side of things, such as writing simple moving average code in Python, without having to worry about how the input data is stored. Depending on your knowledge, this could lead on to more advanced programming ideas and other interesting stuff.
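Once the closing prices for a security are in an array, a simple moving average really is only a few lines of Python. The function below is a minimal sketch and is hypothetical (it is not part of spt.py); the ctypes array of doubles merely stands in for whatever the shared library actually hands back, since the function accepts any sequence of numbers.

```python
import ctypes


def simple_moving_average(prices, period):
    """Return the SMA series for `prices`.

    Element i of the result is the mean of prices[i:i + period], so the
    result has len(prices) - period + 1 values (empty if there are too few).
    `prices` can be a list or a ctypes array of doubles.
    """
    if period <= 0:
        raise ValueError("period must be positive")
    values = list(prices)  # ctypes arrays support len() and iteration
    if len(values) < period:
        return []
    window_sum = sum(values[:period])
    result = [window_sum / period]
    # Slide the window: add the newest price, drop the oldest one.
    for i in range(period, len(values)):
        window_sum += values[i] - values[i - period]
        result.append(window_sum / period)
    return result


# Hypothetical closing prices, held in a C-style array of doubles.
closes = (ctypes.c_double * 6)(10.0, 11.0, 12.0, 13.0, 14.0, 15.0)
print(simple_moving_average(closes, 3))  # [11.0, 12.0, 13.0, 14.0]
```

The running sum keeps the calculation linear in the number of prices; for very long series, recomputing each window with `sum()` avoids any accumulated floating-point drift at the cost of speed.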

Since this seems to be such a common problem, I've decided to release the code so that people can compile it and create the shared library not just on Windows but on other OS platforms such as Linux as well. Hopefully there are no errors, but code is only as good as the people who use and test it!

A picture is worth a thousand words, so let's start with Linux. Once you have extracted the attached zip file, just follow the walk-through in the following diagram:

You will notice that after the shared object file is built, both Python 2.7 and 3.4 complain that they can't find it. This is normal: on Linux, a bare file name such as "libSPT.so" is by default only searched for in the directories the dynamic loader knows about (LD_LIBRARY_PATH, the system library directories and so on), not in the current directory. It is easily fixed by editing the Python script "spt.py" and prefixing "libSPT.so" in the LoadLibrary entry with the full path of the current working directory. To find the current working directory in Linux, type "pwd" (shown at the bottom of the diagram). After that you should be able to run the Python script successfully. If anyone has a more professional solution for loading .so files, please feel free to comment. This will do for now.
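One option that is a bit more robust than hard-coding the output of pwd is to build the path from the location of the script itself, so spt.py works no matter which directory it is launched from. This is a minimal sketch, assuming libSPT.so sits in the same folder as the script; the helper names spt_library_path and load_spt are hypothetical and not part of spt.py.

```python
import ctypes
import os


def spt_library_path(filename="libSPT.so", base_dir=None):
    """Return an absolute path to the shared library.

    By default the library is assumed to live next to this script
    (an assumption), so the result does not depend on the directory
    the script is started from.
    """
    if base_dir is None:
        base_dir = os.path.dirname(os.path.abspath(__file__))
    return os.path.join(base_dir, filename)


def load_spt(filename="libSPT.so", base_dir=None):
    # A name containing a slash makes dlopen() open that exact file
    # instead of searching LD_LIBRARY_PATH and the system library dirs.
    return ctypes.cdll.LoadLibrary(spt_library_path(filename, base_dir))
```

In spt.py, the hard-coded LoadLibrary call could then become `spt = load_spt()`.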

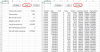

Now on to the more complicated Windows build. First install both Python and the mingw*-g++.exe compiler. To simplify things, find out where your g++ executable is located, then follow the walkthrough in the following diagram for your version of Windows (Win32 or Win64). For the Win64 build, you also need to find the file "libwinpthread-1.dll" somewhere in your MinGW installation and copy it to the folder where you extracted the zip files.

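After that, you can just type "python spt.py" and it should work. There is no need to hard-code paths, as Python on Windows finds the shared library (.dll) in the current working directory, at least with the Python versions used here. Python 3.8 and later no longer search the current working directory when loading DLLs through ctypes, so on newer versions passing the full path to the .dll is the safer option.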
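If you would rather not copy libwinpthread-1.dll around, or you are on Python 3.8 or later (where the current working directory is no longer searched for DLLs loaded through ctypes), a sketch along these lines loads the DLL by its full path and temporarily adds the MinGW bin folder to the DLL search path with `os.add_dll_directory`, which only exists on Windows with Python 3.8+. The function name load_spt_windows, the file name libSPT.dll and the MinGW path are all hypothetical; substitute whatever your build actually produced.

```python
import ctypes
import os


def load_spt_windows(dll_path, mingw_bin=None):
    """Load the (hypothetically named) SPT DLL by absolute path.

    If given, mingw_bin is added to the DLL search path while loading,
    so dependencies such as libwinpthread-1.dll can be found there
    instead of being copied next to the DLL. os.add_dll_directory only
    exists on Windows with Python 3.8+; elsewhere mingw_bin is ignored.
    """
    full_path = os.path.abspath(dll_path)
    if mingw_bin is not None and hasattr(os, "add_dll_directory"):
        # Load-time dependencies are resolved while the DLL is loaded,
        # so the extra directory is only needed inside this block.
        with os.add_dll_directory(mingw_bin):
            return ctypes.CDLL(full_path)
    return ctypes.CDLL(full_path)
```

Usage might look like `spt = load_spt_windows("libSPT.dll", r"C:\mingw64\bin")`, again with both paths being placeholders.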

The attached zip file contains the following files:

quotes.csv - sample file containing security prices (symbol,date,open,high,low,close,volume)

spt.cpp - C++ shared library code

spt.h - C++ header declaring the functions that interface the calling application with the shared library code

spt.py - Python script that uses the shared library to process security prices from CSV files such as the sample file "quotes.csv"
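To make the strict row layout of quotes.csv concrete, here is a small pure-Python sketch (not the library's C++ code) that groups closing prices by symbol from rows of symbol,date,open,high,low,close,volume. It assumes there is no header row and that each symbol's rows are already in date order; both are assumptions, as is the function name read_closes. On a small file it could also be handy for cross-checking what the shared library returns.

```python
import csv
from collections import OrderedDict

CLOSE_COLUMN = 5  # symbol,date,open,high,low,close,volume -> close is index 5


def read_closes(lines):
    """Return an OrderedDict mapping each symbol to its list of closing prices.

    `lines` is any iterable of CSV text lines (e.g. an open file).
    Assumes no header row and rows already sorted by date within each symbol.
    """
    closes = OrderedDict()  # keeps symbols in first-seen order, also on Python 2.7
    for row in csv.reader(lines):
        if not row:
            continue  # skip blank lines
        symbol = row[0]
        closes.setdefault(symbol, []).append(float(row[CLOSE_COLUMN]))
    return closes
```

On Python 3 this could be used as `with open("quotes.csv", newline="") as f: closes = read_closes(f)`.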

Note: spt = securities price tank.

Cheers,

Andrew
